Understanding the AI Act: What Every Company Needs to Know Today

- apuntiturull

- Dec 6, 2025

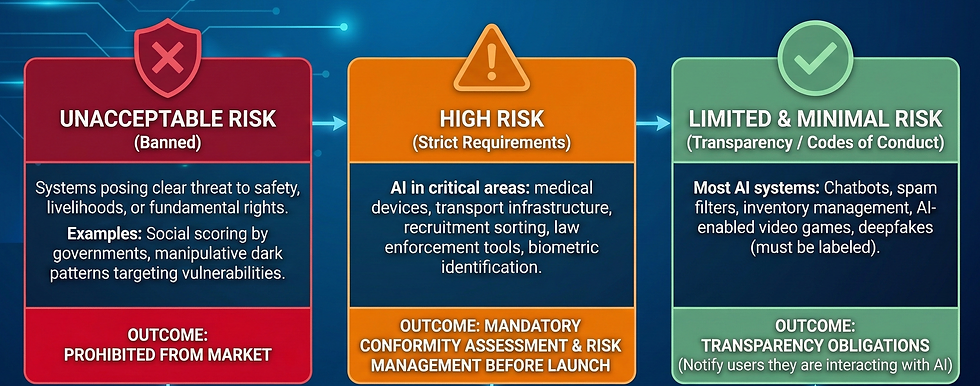

Europe has launched the world’s first full regulatory framework for artificial intelligence. The AI Act is bold, ambitious, and unavoidable. Whether you’re experimenting with ChatGPT, integrating third-party AI tools, or building your own AI products, the regulation applies to you.

What many companies don’t realise is that the AI Act has been in force since 1 August 2024. That doesn’t mean every obligation applies at once: there is a phased timeline, and the countdown has already started. The bans on prohibited practices have applied since 2 February 2025, and stricter rules for high-risk systems will follow.

So what are the consequences today? Do you need to take action already, even if you “only” use ChatGPT internally?

This guide is designed to answer exactly that: what matters now, what can wait, and how to avoid waking up in two years with a compliance problem you could have solved in days.

What is the AI Act?

The AI Act (Regulation (EU) 2024/1689) is a European Union regulation that governs the use of artificial intelligence. The law doesn’t really care whether you’re using ChatGPT, a small in-house model, or a third-party API.

What truly matters is your role and what you’re doing with the technology.

The regulation draws a sharp line between two players:

The provider — the one who builds, trains, or packages the AI system.

If you touch the engine, customise the model, or distribute a solution, you’re playing in the big league of compliance.

The deployer — the one who uses AI inside the organisation.

If you rely on tools created by others, you’re a deployer. Fewer obligations, yes, but still clear responsibilities.

And here is the part most companies overlook:

The risk doesn’t come from the model — it comes from the use case.

A chatbot that explains your holiday policy? Minimal risk.

A classification tool for internal documents? Low risk.

A classification tool that triages patients or candidates? Strictly regulated.

The message is simple:

the AI Act forces companies to examine not just the technology, but the intention and the impact behind it.

So before asking “what do we need to comply with?”, ask yourself two questions that determine everything that comes next:

What role do we play — provider or deployer?

What exactly are we using AI for — and who is affected by those decisions?

From here, the entire regulatory logic unfolds: obligations, transparency, documentation, oversight, and—yes—risk.

FAQ about AI Act

“I have no idea what the AI Act is. What does it regulate?”

The AI Act regulates how AI systems must be designed, deployed, and supervised in the EU.

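It defines what counts as an AI system (Art. 3), sets rules for both providers and deployers, and ensures transparency, safety, and human oversight.

It applies to any organisation that provides or uses AI within the EU, and also to providers and deployers based outside the EU when their AI systems are placed on the EU market or their outputs are used in the EU.

Reference: AI Act Art. 2 (scope) and Art. 3 (definitions)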

“What happens if a company does not comply with the AI Act?”

The AI Act is not a theoretical document — it comes with a very real sanctioning regime. Fines can be extremely high, especially for medium and large companies:

Prohibited practices: up to €35 million or 7% of global annual turnover, whichever is higher.

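Non-compliance with most other obligations (for example, those of providers and deployers): up to €15 million or 3% of turnover, whichever is higher.

Providing incorrect, incomplete, or misleading information to notified bodies or national authorities: up to €7.5 million or 1% of turnover, whichever is higher.

For SMEs and startups, the lower of the two amounts applies, but the consequences can still be severe: project shutdowns, loss of clients, and barriers to entering public or corporate procurement processes.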
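To make the "whichever is higher" rule and the SME exception concrete, here is a minimal Python sketch that computes the maximum possible fine for each tier. It assumes the figures listed above and treats turnover as total worldwide annual turnover for the preceding financial year. The function and tier names are hypothetical, and the result is only a ceiling, not the fine an authority would actually impose.

```python
from decimal import Decimal

# Fine ceilings per tier: (fixed amount in EUR, share of worldwide annual turnover).
# Tier names are illustrative labels, not terms defined by the AI Act.
FINE_TIERS = {
    "prohibited_practice": (Decimal("35000000"), Decimal("0.07")),
    "other_obligation": (Decimal("15000000"), Decimal("0.03")),
    "misleading_information": (Decimal("7500000"), Decimal("0.01")),
}


def max_fine(tier: str, worldwide_turnover_eur: Decimal, is_sme: bool) -> Decimal:
    """Return the upper limit of the fine for one infringement tier.

    Larger companies: the higher of the fixed amount and the turnover share.
    SMEs and startups: the lower of the two.
    """
    fixed_amount, turnover_share = FINE_TIERS[tier]
    turnover_based = worldwide_turnover_eur * turnover_share
    if is_sme:
        return min(fixed_amount, turnover_based)
    return max(fixed_amount, turnover_based)
```

For example, a company with €1 billion in turnover faces a ceiling of €70 million for a prohibited practice, because 7% of its turnover exceeds €35 million. An SME with €10 million in turnover faces a ceiling of €700,000 for the same infringement, because for SMEs the lower figure applies.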

“We only use ChatGPT internally. Does the AI Act affect us?”

Yes, but lightly. You are considered a deployer, not a provider.

Your main obligations are to:

use the tool responsibly,

avoid uploading sensitive or personal data,

apply basic supervision and verification,

create an internal policy for generative AI use.

You do not need certifications or audits at this stage.

“We use several AI tools from third-party vendors. What does the AI Act require from us?”

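You are a deployer, and your obligations grow with the risk of the use case. Where a tool supports a high-risk use (such as screening job candidates), you must: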

ensure the vendor provides clear usage instructions and limitations,

train your staff,

monitor the system for errors,

ensure the data you feed the system is appropriate,

report incidents if something goes seriously wrong.

Using AI is not passive: the company remains accountable.

“What practical changes should my company expect?”

Expect to:

document how and where you use AI,

define internal AI policies,

increase transparency in AI-driven processes,

implement human oversight where relevant,

verify your vendors,

prepare basic evidence of compliance.

Organisations that develop their own AI must additionally implement governance, quality controls, and documentation structures.
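One way to start documenting how and where you use AI is a simple use-case register that records the answers to the two key questions from earlier: what role you play and what the AI is used for. The sketch below is a hypothetical Python structure. The AI Act does not prescribe this format, the field names and risk labels are illustrative assumptions, and deciding the risk level of each use case still requires a proper legal assessment.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    PROVIDER = "provider"  # builds, trains, or packages the AI system
    DEPLOYER = "deployer"  # uses an AI system created by others


class RiskLevel(Enum):  # illustrative labels, assessed per use case
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    PROHIBITED = "prohibited"


@dataclass
class AIUseCase:
    name: str
    tool: str              # model or vendor product behind the use case
    role: Role
    purpose: str           # what exactly the AI is used for
    affected_people: str   # who is affected by its outputs
    risk_level: RiskLevel  # depends on the use case, not the model
    human_oversight: bool  # does a person review or approve the outputs?


def needs_attention(inventory: list[AIUseCase]) -> list[str]:
    """Return the names of use cases that should be escalated for review."""
    flagged = []
    for use_case in inventory:
        if use_case.risk_level is RiskLevel.PROHIBITED:
            flagged.append(use_case.name)
        elif use_case.risk_level is RiskLevel.HIGH and not use_case.human_oversight:
            flagged.append(use_case.name)
    return flagged


inventory = [
    AIUseCase("HR policy chatbot", "ChatGPT", Role.DEPLOYER,
              "answer questions about the holiday policy", "employees",
              RiskLevel.MINIMAL, human_oversight=False),
    AIUseCase("CV screening", "third-party vendor tool", Role.DEPLOYER,
              "rank job candidates", "job applicants",
              RiskLevel.HIGH, human_oversight=False),
]
print(needs_attention(inventory))  # → ['CV screening']
```

Even a register this simple makes the Act's logic visible: the same deployer role can carry very different obligations depending on who is affected by each use case.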

"What Early-Stage AI Users Really Need to Know"

If you are experimenting with generative AI, your main responsibilities are:

protecting data,

supervising outputs,

guaranteeing ethical usage,

ensuring transparency with users or employees affected.

No heavy compliance required — yet.

How Somia Helps Companies Comply with the AI Act

Somia’s core mission is simple:

give you a platform where AI Act compliance is already built in, so you can focus on creating value — not navigating regulation.

You use the platform knowing that transparency, traceability, and oversight are pre-configured.

✔ Automatic logs and traceability

Every interaction, action, and decision is recorded.

You always have a full audit trail ready.

✔ Human validation whenever you need it

Whether it’s a workflow, a document, a message, or a decision, you can enable human approval on demand.

✔ We guide you through defining your use cases

Somia helps you understand, refine, and structure your use cases, and implements them safely inside our platform, aligned with the AI Act’s risk logic.

✔ Works with any AI model

OpenAI, DeepSeek, open-source models…

Somia ensures outputs, logs, and processes meet EU rules, no matter what model sits underneath.

Want to Know More?

If you want to understand how Somia can help your company use AI safely, responsibly, and aligned with the EU AI Act, request a demo.

Beyond private solutions, it’s important to highlight that governments and public institutions also provide support to help companies understand and adopt responsible AI practices.

One initiative we strongly recommend is the Observatori d’Ètica en Intel·ligència Artificial de Catalunya (OEIAC), the Observatory for Ethics in Artificial Intelligence of Catalonia.

The OEIAC is part of the Catalonia.AI strategy of the Government of Catalonia and is dedicated to promoting the ethical and responsible use of AI. Together with the Catalan Government, it has launched the Manifest for Trustworthy AI, reinforcing the commitment to safe, transparent, and legally compliant AI adoption.

Critically for companies, the OEIAC offers a free ethical AI assessment framework, which allows organisations to perform an initial audit of their AI use cases.

This helps businesses understand risks, identify gaps, and align their practices with ethical and regulatory expectations — at no cost.

Conclusions

The arrival of the AI Act should not be seen as a threat, but as an opportunity to improve quality and safety in the use of technology. At Somia, we believe in ethical, controlled, and truly useful artificial intelligence for companies of all sizes.

Having agents that are adapted to the legal environment will allow companies not only to avoid sanctions, but also to gain efficiency, reputation, and long-term sustainability.

Legal Notice

This publication is intended solely for general informational purposes and should not be considered legal, technical, or professional advice. It must not be used as a basis for making decisions or refraining from action regarding specific situations. Any decisions related to AI Act compliance or other applicable regulations should be made with professional advice tailored to the particular circumstances of each organisation.

The author assumes no responsibility for any actions taken, or not taken, based on the information contained in this document, nor for any consequences that may arise from its use.